Web scraping techniques are changing how we gather and analyze information from the internet. Mastering these methods allows individuals and businesses to extract valuable insights from a wide range of online sources. Many web scraping tools are available, from simple data extraction scripts to sophisticated frameworks, so understanding how to scrape websites effectively matters more than the choice of any single tool. It is just as important to follow ethical web scraping practices to avoid technical and legal pitfalls. In this article, we cover the best practices and tools you need to start scraping with confidence.

Automated retrieval of information from online sources has become a popular way to collect data. This practice, also referred to as web harvesting or web data extraction, involves the systematic extraction of data from websites. (It is sometimes confused with data mining, which is the analysis of data that has already been collected.) Various methodologies and software applications facilitate the process, enabling both novices and experts to gather the information they need efficiently. As we explore this topic, we will highlight effective strategies for online data acquisition, including the ethical considerations and technical tools needed to implement them. Understanding these approaches will enhance your data collection capabilities and help you stay within legal standards.

Understanding Web Scraping Techniques

Web scraping techniques involve various methods for extracting data from websites. These techniques can range from simple HTTP requests to more complex approaches that employ browser automation. One of the foundational methods is the use of libraries such as Beautiful Soup in Python, which allows users to parse HTML content and extract specific data points. Additionally, developers may utilize APIs provided by websites, which often offer a legal and structured way to gather data without the need for scraping the site directly.
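To make the parsing step concrete, here is a minimal sketch that pulls heading text out of an HTML snippet. It uses Python's standard-library `html.parser` as a stand-in so that it runs without extra installs; Beautiful Soup offers a higher-level interface for the same job. The sample HTML and the names `HeadingExtractor` and `extract_headings` are made up for illustration.

```python
from html.parser import HTMLParser


class HeadingExtractor(HTMLParser):
    """Collect the text content of every <h2> element."""

    def __init__(self):
        super().__init__()
        self._in_h2 = False
        self.headings = []

    def handle_starttag(self, tag, attrs):
        if tag == "h2":
            self._in_h2 = True
            self.headings.append("")  # start a new heading

    def handle_endtag(self, tag):
        if tag == "h2":
            self._in_h2 = False

    def handle_data(self, data):
        # Text inside nested tags (e.g. <em>) still belongs to the open <h2>.
        if self._in_h2:
            self.headings[-1] += data


def extract_headings(html):
    parser = HeadingExtractor()
    parser.feed(html)
    parser.close()
    return [heading.strip() for heading in parser.headings]


# Hypothetical page fragment used only for this example.
SAMPLE_HTML = """
<html><body>
  <h1>Catalog</h1>
  <h2>Blue Widget</h2><p>$19.99</p>
  <h2>Red <em>Gadget</em></h2><p>$24.50</p>
</body></html>
"""

print(extract_headings(SAMPLE_HTML))  # ['Blue Widget', 'Red Gadget']
```

On a real page you would fetch the HTML first and pick the tags to collect after inspecting the page structure.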

Another common technique in web scraping is the use of browser automation tools like Puppeteer or Selenium, which can drive a real browser in headless mode (that is, without a visible window). These tools simulate a real user interacting with a website, which is particularly useful for scraping dynamic content that is loaded via JavaScript. By rendering the page as a browser would, these tools can capture data that is not present in the raw HTML source. Understanding these various techniques enables users to choose the right approach for their specific scraping needs.

Essential Web Scraping Tools

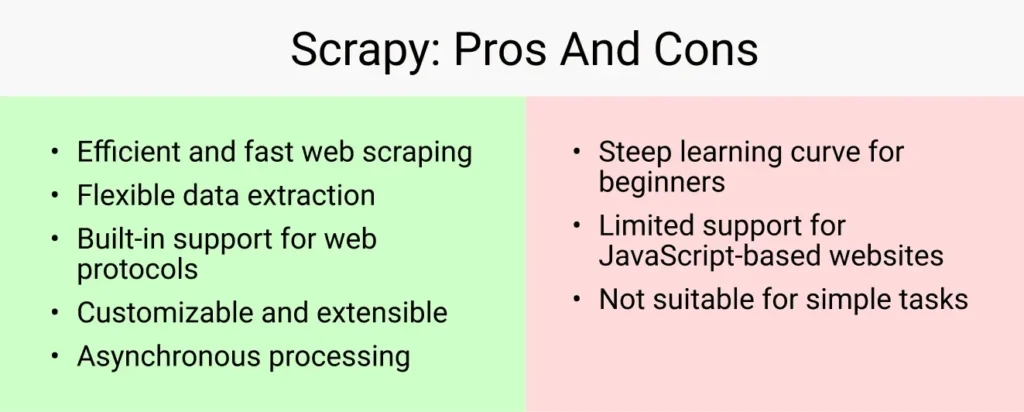

When it comes to web scraping, selecting the right tools is paramount to success. Popular web scraping tools include Scrapy, a powerful and versatile Python framework designed specifically for web scraping projects. Scrapy allows users to build spiders that navigate a website and collect data efficiently. Its built-in features, such as item pipelines for processing scraped data and feed exports to formats like JSON and CSV, make it a favorite among developers working on large-scale scraping projects.

In addition to Scrapy, another robust tool is Beautiful Soup, which is particularly effective for smaller projects where simplicity is key. With Beautiful Soup, users can easily navigate and search through the parse tree of a web page. This ease of use makes it an excellent choice for beginners who are just starting to learn how to scrape websites. For those requiring more advanced capabilities, tools like Selenium offer the ability to interact with web elements, making them suitable for sites that require user actions to display content.

How to Scrape Websites Effectively

Learning how to scrape websites effectively requires a combination of understanding the target site structure and employing the right tools. The first step is to analyze the website to determine which data needs to be extracted and how it is structured within the HTML. This can often be done using browser developer tools, which allow users to inspect elements and identify the relevant tags containing the desired information.

Once the structure is understood, users can write scripts using languages like Python or JavaScript to automate the data extraction process. It is vital to implement proper error handling and data validation to ensure that the extracted information is accurate and reliable. Additionally, users should be mindful of the scraping frequency to avoid overwhelming the website’s server, which could lead to IP bans or legal repercussions.
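The error handling and validation mentioned above could look roughly like the following sketch. The retry count, backoff schedule, and the `title` and `price` fields are assumptions chosen for the example, and the `fetch` and `sleep` parameters exist only so the logic can be exercised without touching the network.

```python
import time
import urllib.error
import urllib.request


def _default_fetch(url):
    # Plain standard-library download; a timeout avoids hanging forever.
    with urllib.request.urlopen(url, timeout=10) as response:
        return response.read().decode("utf-8", errors="replace")


def fetch_with_retries(url, fetch=None, retries=3, backoff_seconds=1.0,
                       sleep=time.sleep):
    """Return the page body, retrying failures with exponential backoff."""
    if fetch is None:
        fetch = _default_fetch
    last_error = None
    for attempt in range(retries):
        try:
            return fetch(url)
        except (urllib.error.URLError, TimeoutError) as exc:
            last_error = exc
            if attempt < retries - 1:
                # Wait 1x, 2x, 4x ... the base delay between attempts.
                sleep(backoff_seconds * 2 ** attempt)
    raise RuntimeError(f"giving up on {url} after {retries} attempts") from last_error


def validate_record(record):
    """Return a list of problems in one scraped record (empty if valid)."""
    problems = []
    if not str(record.get("title") or "").strip():
        problems.append("missing title")
    try:
        if float(record.get("price", "")) < 0:
            problems.append("negative price")
    except (TypeError, ValueError):
        problems.append("price is not a number")
    return problems
```

Records that come back with a non-empty problem list can be logged and skipped rather than silently written to your dataset.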

Data Extraction Methods for Web Scraping

Data extraction methods in web scraping vary with the complexity of the target site and the volume of data needed. One basic method is the use of regular expressions to locate and extract specific data patterns, such as prices, dates, or email addresses, from the HTML content. Regular expressions can be particularly useful when the data is not easily accessible through standard parsing methods, but they are brittle when used on the HTML structure itself, so they work best on small, predictable fragments of text.
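As a small illustration of pattern-based extraction, the sketch below finds dollar prices in a fragment of markup. The pattern and the sample string are assumptions for this example; a real site will likely need its own pattern.

```python
import re

# A dollar sign followed by digits and an optional two-digit cents part.
PRICE_PATTERN = re.compile(r"\$(\d+(?:\.\d{2})?)")


def extract_prices(html):
    """Return every dollar amount found in the text as a float."""
    return [float(amount) for amount in PRICE_PATTERN.findall(html)]


# Hypothetical fragment used only for this example.
SAMPLE = (
    '<li class="item">Blue Widget <span>$19.99</span></li>'
    '<li class="item">Red Gadget <span>$5</span></li>'
)

print(extract_prices(SAMPLE))  # [19.99, 5.0]
```

The pattern targets the price text rather than the surrounding tags, so it keeps working if the markup around the price changes.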

Another effective method is to use an API when one is available, as it provides a structured and often easier way to access data without the risks associated with scraping. For instance, platforms such as X (formerly Twitter) and Facebook offer APIs that allow developers to collect data programmatically, although access may require registration, and rate limits or fees may apply. This approach simplifies the extraction process and, as long as you follow the API’s usage terms, helps keep you within the platform’s terms of service.
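Working with an API usually comes down to building a request URL and decoding a JSON response. The sketch below shows both steps; the endpoint `https://api.example.com/v1/products`, the query parameters, and the `items`, `id`, and `name` fields are all hypothetical, so check the documentation of the API you actually use.

```python
import json
from urllib.parse import urlencode


def build_search_url(base_url, query, page=1, per_page=50):
    """Build a paginated search URL with properly encoded parameters."""
    params = urlencode({"q": query, "page": page, "per_page": per_page})
    return f"{base_url}?{params}"


def parse_items(payload):
    """Extract (id, name) pairs from a JSON response body."""
    data = json.loads(payload)
    return [(item["id"], item["name"]) for item in data.get("items", [])]


# Hypothetical response body, shaped the way this example assumes.
SAMPLE_PAYLOAD = '{"items": [{"id": 1, "name": "Blue Widget"}, {"id": 2, "name": "Red Gadget"}]}'

print(build_search_url("https://api.example.com/v1/products", "blue widget"))
print(parse_items(SAMPLE_PAYLOAD))
```

Because the response is already structured, there is no HTML to parse and far less to break when the site's layout changes.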

Ethical Web Scraping Practices

Ethical web scraping practices are crucial to maintaining the integrity of the scraping community and avoiding legal issues. One of the fundamental principles is to always check a website’s robots.txt file before scraping. This file tells automated clients which parts of the site the owner does and does not want crawled. It is advisory rather than an access control, so it does not by itself block anything, but honoring it is the baseline for responsible scraping.
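Python's standard library can interpret robots.txt rules for you. The sketch below parses an inline example file rather than downloading one; the rules, the `example-bot` user agent, and the URLs are assumptions for illustration.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt content used only for this example.
ROBOTS_TXT = """\
User-agent: *
Disallow: /private/
Crawl-delay: 5
"""


def build_parser(robots_txt):
    """Create a parser from the text of a robots.txt file."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return parser


robots = build_parser(ROBOTS_TXT)
print(robots.can_fetch("example-bot", "https://example.com/products/1"))   # True
print(robots.can_fetch("example-bot", "https://example.com/private/data"))  # False
print(robots.crawl_delay("example-bot"))  # 5
```

For a live site, `RobotFileParser` can also download the file itself with `set_url(...)` followed by `read()`.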

Moreover, it’s essential to respect the website’s server load by implementing appropriate scraping intervals and limits on the number of requests made in a given timeframe. Responsible scraping not only helps in maintaining the accessibility of the site for other users but also fosters goodwill between scrapers and website owners.
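One simple way to space out requests is a throttle that enforces a minimum gap between calls. This is a minimal sketch: the `Throttle` class and the two-second interval are assumptions, and the `clock` and `sleep` parameters are injectable only so the behavior can be tested without real waiting.

```python
import time


class Throttle:
    """Enforce a minimum delay between consecutive requests."""

    def __init__(self, min_interval, clock=time.monotonic, sleep=time.sleep):
        self.min_interval = min_interval
        self._clock = clock
        self._sleep = sleep
        self._last_request = None

    def wait(self):
        """Block until at least min_interval has passed since the last call."""
        now = self._clock()
        if self._last_request is not None:
            remaining = self.min_interval - (now - self._last_request)
            if remaining > 0:
                self._sleep(remaining)
                now = self._clock()
        self._last_request = now


# Usage: call wait() before each request.
# throttle = Throttle(min_interval=2.0)
# for url in urls:
#     throttle.wait()
#     page = download(url)  # download() is a placeholder for your fetch code
```

If the site's robots.txt declares a crawl delay, that value is a sensible lower bound for `min_interval`.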

Web Scraping Best Practices

Following web scraping best practices can significantly enhance the effectiveness and efficiency of your scraping projects. First, it’s advisable to structure your code well and implement logging to keep track of the scraping process. This will help you identify any issues that arise during extraction and improve the overall reliability of your scripts.
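A logging setup along these lines might look like the sketch below, which uses Python's standard `logging` module. The `scrape_all` function and its `scrape_one` callback are hypothetical names; `scrape_one` stands for whatever function extracts data from a single URL in your project.

```python
import logging

logger = logging.getLogger("scraper")


def scrape_all(urls, scrape_one):
    """Apply scrape_one to each URL, logging every success and failure."""
    results = {}
    failures = []
    for url in urls:
        try:
            results[url] = scrape_one(url)
            logger.info("scraped %s", url)
        except Exception:
            # logger.exception records the full traceback with the message.
            logger.exception("failed to scrape %s", url)
            failures.append(url)
    logger.info("finished: %d succeeded, %d failed", len(results), len(failures))
    return results, failures


# In your entry point, configure the output once, for example:
# logging.basicConfig(
#     level=logging.INFO,
#     format="%(asctime)s %(levelname)s %(name)s: %(message)s",
# )
```

Catching the error per URL means one bad page does not stop the whole run, and the returned list of failures tells you what to retry.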

Additionally, consider using proxies to distribute requests across multiple IP addresses. This strategy can help avoid detection and potential IP bans from the target website. By following these best practices, you can ensure that your web scraping activities are not only successful but also conducted in a sustainable manner.

The Importance of Web Scraping in Data Analysis

Web scraping plays a pivotal role in data analysis by providing access to vast amounts of information that can be leveraged for insights and decision-making. In today’s data-driven landscape, businesses and researchers rely on web scraping to gather market intelligence, track competitor pricing, and analyze trends in real-time. This capability empowers organizations to make informed decisions based on up-to-date information.

Moreover, web scraping facilitates academic research by allowing scholars to collect data from various online sources for their studies. This method of data collection can significantly enhance the quality of research by providing access to diverse datasets that would otherwise be challenging to compile. As such, the importance of web scraping in data analysis cannot be overstated.

Navigating Legal Challenges in Web Scraping

Legal challenges in web scraping are a significant concern for both individual scrapers and organizations. It is essential to understand the legal implications associated with scraping websites, particularly regarding copyright laws and terms of service agreements. Many websites explicitly prohibit scraping in their terms, and ignoring these stipulations can lead to legal actions against the scraper.

To navigate these challenges, it is advisable to consult legal experts when engaging in large-scale scraping projects. Additionally, developing a clear understanding of the laws governing data collection in your jurisdiction can help mitigate risks. By adhering to legal standards and ethical guidelines, you can conduct web scraping activities with greater confidence.

Future Trends in Web Scraping Technologies

The future of web scraping technologies is poised for exciting advancements, driven by the increasing demand for data and the evolution of web technologies. As websites become more dynamic and complex, the tools and methods for scraping data will also evolve. Innovations in artificial intelligence and machine learning are expected to enhance web scraping capabilities, allowing for more sophisticated data extraction techniques.

Additionally, the rise of headless browsers and automated scraping frameworks will continue to simplify the process of data extraction. This will enable users with limited technical skills to access valuable information. As the landscape of web data continues to grow, staying abreast of these trends will be crucial for anyone involved in web scraping.

Frequently Asked Questions

What are the best web scraping techniques for beginners?

For beginners, the best web scraping techniques include using tools like Beautiful Soup for HTML parsing, Scrapy for larger projects, and Selenium for dynamic websites. These tools simplify data extraction and allow users to focus on learning the fundamentals of web scraping.

How do I choose the right web scraping tools for my project?

Choosing the right web scraping tools depends on your project’s needs. If you require simple data extraction, Beautiful Soup may suffice. For complex tasks involving dynamic content, consider using Selenium or Puppeteer. Scrapy is ideal for larger-scale scraping projects.

What are the ethical web scraping practices to follow?

Ethical web scraping practices include respecting the website’s robots.txt file, limiting the frequency of requests to avoid overloading servers, and ensuring compliance with legal regulations. Always seek permission when necessary and avoid scraping sensitive data.

What are some common data extraction methods in web scraping?

Common data extraction methods in web scraping include HTML parsing, using APIs, and web automation. HTML parsing is done with libraries like Beautiful Soup, while APIs provide structured data. Web automation tools like Selenium simulate user interaction for dynamic content extraction.

How can I improve my web scraping skills quickly?

To improve your web scraping skills quickly, practice by working on small projects, utilize online tutorials, and join web scraping communities. Familiarize yourself with popular libraries and tools, and try to scrape different types of data from various websites to enhance your expertise.

What are the legal considerations when using web scraping techniques?

When using web scraping techniques, it’s important to understand legal considerations such as copyright laws, terms of service of the websites, and data protection regulations like GDPR. Always review the website’s policies and seek legal advice if unsure about scraping certain data.

Can I scrape data from any website using web scraping tools?

While web scraping tools can technically be used to extract data from most websites, not all websites allow scraping. It’s crucial to check the site’s robots.txt file and terms of service to ensure compliance and avoid potential legal issues.

What are the best practices for effective web scraping?

Best practices for effective web scraping include respecting robots.txt, managing request rates to prevent server overload, using user-agent rotation, and handling errors gracefully. Additionally, storing scraped data securely and maintaining a clear code structure will enhance your scraping projects.

| Key Point | Description |
|---|---|
| Definition | Web scraping is the automated process of collecting information from websites. |
| Importance | Web scraping is essential in a data-driven world for extracting valuable insights. |
| Common Tools | Popular tools for web scraping include Beautiful Soup, Scrapy, Selenium, and Puppeteer. |
| Programming Languages | Languages like Python, R, and JavaScript are commonly used for web scraping. |
| Ethical Guidelines | Always follow ethical guidelines and respect robots.txt files to avoid legal issues. |
| Applications | Web scraping can be used for academic research, competitive analysis, and data analytics. |

Summary

Web scraping techniques are vital for anyone looking to harness the power of online data. By employing various methods and tools, individuals and organizations can extract meaningful information from websites, enabling informed decision-making. Understanding the different web scraping methods, ethical considerations, and available tools can significantly enhance your data analysis capabilities. As the demand for data continues to grow, mastering web scraping techniques will undoubtedly give you a competitive edge in your field. Happy scraping!