Web scraping techniques have become essential tools for anyone looking to extract valuable data from the vast expanse of the internet. With the right data extraction tools, users can efficiently gather information from various websites, allowing businesses and researchers to gain insights that drive decision-making. In this article, we will delve into how to scrape websites effectively, highlighting best web scraping practices that ensure compliance and efficiency. We will provide a comprehensive beautiful soup tutorial alongside a guide for using Scrapy for beginners, making it easier for readers to implement these powerful techniques. By mastering these skills, you’ll be well-equipped to navigate the challenges of data gathering in today’s data-driven landscape.

When discussing methods for gathering information online, various terms such as data harvesting and web data extraction come to mind. These strategies are pivotal for those looking to acquire structured data from unstructured web sources. Throughout this article, we will explore several effective methods and approaches that enable users to streamline their data collection processes. By focusing on the best practices for web scraping, readers will learn how to manage and store their findings efficiently. Whether you are a novice in programming or an experienced developer, the tools and techniques discussed here will enhance your ability to extract actionable insights from the web.

Understanding Web Scraping Techniques

Web scraping techniques are essential for extracting valuable information from websites efficiently. These methods can range from simple HTML parsing to more complex approaches that involve using APIs or handling JavaScript-rendered content. Knowing how to scrape websites effectively is crucial for anyone looking to gather data from multiple sources. A solid foundation in web scraping techniques can enable businesses to make informed decisions based on real-time data analysis.

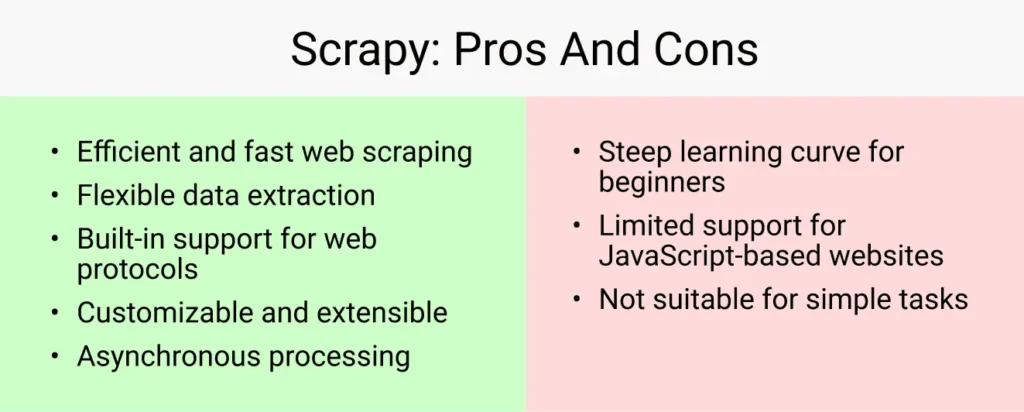

Additionally, there are various tools available for web scraping, each with its own set of features and capabilities. Some of the most popular tools include Beautiful Soup and Scrapy, both of which are Python libraries designed to simplify the web scraping process. Understanding the strengths and weaknesses of these tools is vital in selecting the right one for your specific scraping needs.

Choosing the Right Data Extraction Tools

When embarking on a web scraping project, selecting the right data extraction tools is paramount. There are numerous options available, ranging from browser extensions to dedicated software applications. Tools like Beautiful Soup are great for beginners due to their user-friendly syntax and comprehensive documentation. On the other hand, Scrapy might be more suitable for advanced users looking to build complex scraping frameworks with greater efficiency.

Moreover, the choice of tools can significantly impact the quality and speed of data extraction. It’s important to consider factors like the volume of data you wish to collect and the structure of the target website. Effective data extraction tools can help streamline the scraping process, making it easier to automate tasks and manage large datasets.

Best Web Scraping Practices for Efficiency

Implementing best web scraping practices is crucial for ensuring efficiency and reliability in your data collection efforts. One key practice is to respect the website’s robots.txt file, which outlines the site’s scraping policies. Adhering to these guidelines not only helps avoid potential legal issues but also fosters goodwill with the website owners.

Additionally, it’s advisable to implement rate limiting to prevent overwhelming the target server with requests. This not only minimizes the risk of being blocked but also ensures that your scraping activities are ethical and sustainable. Always remember that the aim is to gather data in a manner that is respectful and compliant with the website’s terms of service.

Handling Websites with Anti-Scraping Measures

Many websites employ anti-scraping measures to protect their data from unauthorized extraction. Understanding how to navigate these barriers is critical for successful web scraping. Techniques such as rotating user agents, using proxies, and employing headless browsers can help bypass some of these restrictions. For instance, rotating user agents can make it appear as though requests are coming from different browsers, reducing the likelihood of being detected as a bot.

Furthermore, it’s important to stay updated on the latest anti-scraping technologies employed by websites. Some may use CAPTCHAs or JavaScript challenges that require additional handling. Being prepared with strategies to tackle these obstacles can save time and effort in the long run.

Storing and Managing Scraped Data Effectively

Once data has been successfully scraped, the next step is managing and storing this information effectively. Choosing the right database or storage solution is critical, as it directly impacts how easily you can access and analyze the data later. Options include traditional SQL databases, NoSQL databases for unstructured data, or even simple CSV files, depending on the complexity and size of your dataset.

Moreover, organizing scraped data in a logical structure can facilitate smoother analysis and reporting. Implementing a clear naming convention for files and utilizing metadata can help in efficiently retrieving and processing the data when needed. Proper data management practices are essential for maximizing the value derived from your web scraping efforts.

Getting Started with Beautiful Soup Tutorial

Beautiful Soup is a powerful library in Python that simplifies the process of web scraping. Its intuitive syntax allows users to navigate through HTML and XML documents easily. To get started with Beautiful Soup, it’s essential to set up your Python environment and install the library via pip. Once installed, you can begin by importing the library and using it to parse HTML content retrieved from a webpage.

A basic Beautiful Soup tutorial would involve fetching a webpage using the requests library, parsing the HTML content with Beautiful Soup, and then extracting the desired data. For example, you could extract all the headings or links from a webpage using simple commands, making it an excellent choice for beginners looking to dive into web scraping.

Scrapy for Beginners: Building Your First Web Scraper

Scrapy is an open-source and powerful web scraping framework that allows users to build robust web scrapers quickly. For beginners, getting started with Scrapy involves installing the framework via pip and creating a new Scrapy project. The framework provides a structured way to define spiders, which are classes that contain the logic for scraping specific websites.

A typical Scrapy project setup includes defining item classes for the data you want to collect, writing spiders to extract that data, and utilizing Scrapy’s built-in features like item pipelines for data processing. By following tutorials and documentation, even novice users can create their first web scraper in a short amount of time and gradually expand its capabilities.

Ethical Considerations in Web Scraping

As web scraping becomes increasingly popular, it’s essential to consider the ethical implications of this practice. Respecting the terms of service of the websites you are scraping is crucial for maintaining ethical standards. Many websites explicitly state their policies regarding data extraction, and violating these terms can lead to legal consequences.

Moreover, ethical scraping practices include being transparent about your intentions and giving credit when necessary. If your scraping efforts contribute to research or data analysis, acknowledging the original sources can foster a positive relationship with content creators and website owners.

The Future of Web Scraping in the Age of Big Data

With the rise of big data, web scraping has become an invaluable tool for businesses and researchers alike. The ability to collect vast amounts of data from various online sources allows organizations to gain insights and make data-driven decisions. As more data is generated online, the demand for efficient web scraping techniques will only continue to grow.

Looking towards the future, advancements in machine learning and artificial intelligence may further enhance the capabilities of web scraping tools, making them more sophisticated and easier to use. As technology evolves, staying informed about the latest trends and tools in web scraping will be essential for anyone looking to harness the power of data.

Frequently Asked Questions

What are the best web scraping practices for beginners?

When starting with web scraping, it’s essential to follow best practices to ensure efficiency and compliance. First, choose the right data extraction tools, such as Beautiful Soup or Scrapy, based on your needs. Always respect the website’s terms of service and robots.txt file to avoid legal issues. Additionally, implement error handling in your script to manage unexpected website changes and ensure your scraper runs smoothly.

How can I scrape websites with anti-scraping measures?

Scraping websites with anti-scraping measures requires strategic techniques. Begin by using user-agent rotation to mimic different browsers, which can help bypass basic restrictions. Implementing delays between requests can also reduce the risk of being blocked. Tools like Scrapy provide middlewares that can assist in managing these tactics effectively. Additionally, consider using proxies to mask your IP address and spread out the scraping load.

What is a Beautiful Soup tutorial for beginners looking to scrape data?

A Beautiful Soup tutorial for beginners typically covers the following steps: First, install Beautiful Soup with pip and import it alongside requests to fetch HTML content. Next, parse the HTML using Beautiful Soup’s ‘BeautifulSoup’ class. You can then navigate and search the parse tree using methods like ‘find()’ and ‘find_all()’ to extract the desired data. The tutorial often includes example code snippets to demonstrate these techniques in action.

Why is selecting the right scraping tool important for effective data extraction?

Selecting the right scraping tool is crucial for effective data extraction because different tools offer varied functionalities suited to specific tasks. For instance, Beautiful Soup is excellent for parsing HTML and navigating complex document structures, while Scrapy is more powerful for large-scale scraping projects with its built-in features for handling requests and managing scraped data. The right tool enhances efficiency and can simplify the complexities of web scraping.

What are some effective data extraction tools for web scraping?

Effective data extraction tools for web scraping include Beautiful Soup, Scrapy, Selenium, and Puppeteer. Beautiful Soup is ideal for beginners due to its straightforward syntax for parsing HTML. Scrapy, on the other hand, is a robust framework for larger projects, offering features for managing data pipelines. Selenium is useful for scraping dynamic content generated by JavaScript, while Puppeteer provides a headless browser environment for complex scraping tasks.

How can I manage and store scraped data effectively?

Managing and storing scraped data effectively involves choosing the right format and storage solution. Consider using CSV or JSON for easy data manipulation and readability. For larger datasets, databases like SQLite or MongoDB are beneficial for structured storage and querying. Additionally, implement data cleaning and validation processes to ensure the quality of your scraped data before storing it.

What ethical considerations should I keep in mind when using web scraping techniques?

When using web scraping techniques, it’s vital to adhere to ethical considerations. Always check the website’s terms of service to understand their stance on scraping. Avoid scraping sensitive or personal data without consent, and ensure that your scraping activities do not overload the website’s server. Practicing responsible scraping helps maintain the integrity of web resources and fosters goodwill within the web community.

| Key Point | Description |

|---|---|

| Selecting the Right Scraping Tool | Choosing the appropriate tool is critical for successful web scraping, impacting efficiency and effectiveness. |

| Handling Anti-Scraping Measures | Techniques to bypass or deal with measures that websites put in place to prevent scraping. |

| Best Practices for Data Management | Strategies to efficiently store and manage the data collected from web scraping activities. |

| Code Snippets for Beginners | Simple examples using Python libraries like Beautiful Soup and Scrapy to help users get started. |

| Ethical Considerations | Discussion on the importance of adhering to website terms of service while scraping. |

Summary

Web scraping techniques are essential for extracting data from various online sources effectively. This article dives into significant aspects of web scraping, such as the importance of the right tools, handling anti-scraping measures, and best practices for data management. By understanding and applying these web scraping techniques, both businesses and researchers can leverage vast amounts of information available online while maintaining ethical considerations in their scraping practices.