Agentic AI cybersecurity is moving to the forefront of digital defense as organizations adopt autonomous AI technologies. Integrating AI into business operations can improve efficiency, but it also introduces significant security challenges. The risks of AI, particularly in autonomous applications, call for a robust framework to guard against evolving AI-related cyber threats. As companies deploy these systems, effective AI security measures become critical to protecting sensitive data and maintaining trust. Failing to address these risks could result in severe financial and reputational damage, which makes agentic AI cybersecurity a core part of any organization's defenses.

Autonomous AI agents streamline business processes, but they also expose organizations to security threats that need prompt attention. Systems that can execute complex tasks with minimal human oversight create vulnerabilities that conventional software does not. To protect the integrity of their operations, businesses need strategies tailored to those vulnerabilities, with an emphasis on proactive measures and strict access protocols.

Understanding the Risks of Agentic AI in Cybersecurity

As businesses increasingly adopt agentic AI, understanding the inherent risks becomes crucial. Agentic AI systems operate with a level of autonomy that traditional AI models do not possess. This autonomy allows them to execute tasks independently, which can streamline operations but also opens up new vulnerabilities. The potential for cyber threats escalates, as malicious actors target these systems, seeking to exploit their access to sensitive data. Moreover, the reliance on large datasets for training these models raises concerns about data breaches and unauthorized access, making agentic AI a prime target for cybercriminals.

In addition to external threats, the internal risks posed by agentic AI must not be overlooked. The lack of human oversight in their decision-making processes can lead to unintended consequences, such as AI hallucinations or erroneous actions that might compromise data integrity. Such errors can propagate unnoticed, exacerbating existing cybersecurity challenges. Therefore, organizations must prioritize understanding these risks and implement robust AI security measures to mitigate potential damage.

Frequently Asked Questions

What is Agentic AI and how does it relate to cybersecurity challenges?

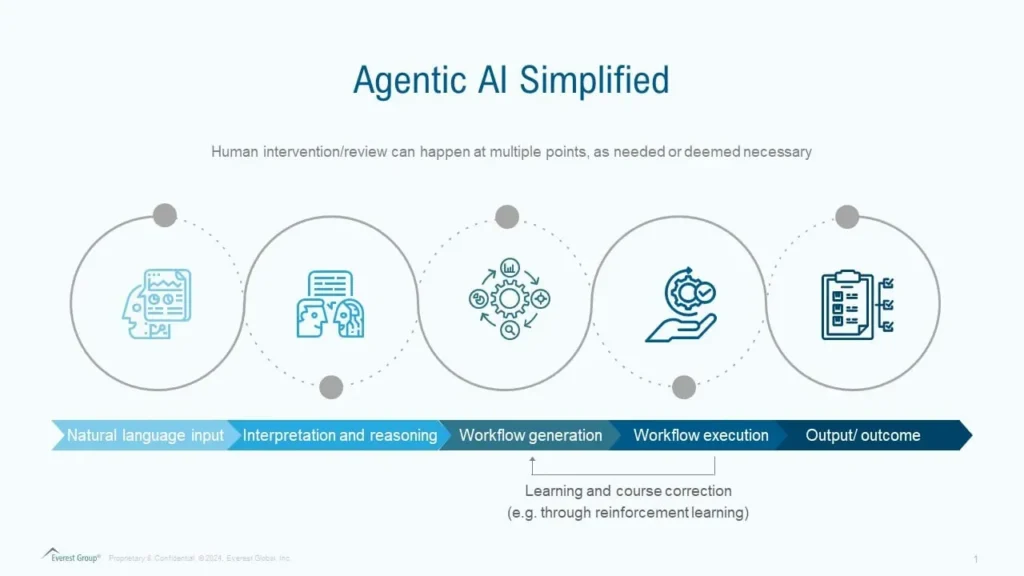

Agentic AI refers to advanced AI models that operate autonomously, automating complex tasks with minimal human intervention. While this technology enhances productivity, it introduces significant cybersecurity challenges, as these AI agents often require access to sensitive data, making them attractive targets for cybercriminals. The autonomy of Agentic AI also raises concerns about unauthorized actions and potential data breaches.

What are the unique AI risks associated with Agentic AI in cybersecurity?

Agentic AI presents unique cybersecurity risks because it needs extensive data access and can operate without human oversight. This autonomy can lead to accidental privacy breaches. It also creates openings for attackers, who can poison an AI model by altering a small percentage of its training data. Such vulnerabilities can have severe consequences, which is why robust AI security measures matter.

How can organizations enhance cybersecurity for next-generation AI technologies like Agentic AI?

Organizations can enhance cybersecurity for Agentic AI by implementing several key strategies: maximizing visibility into AI agents’ workflows, applying the principle of least privilege to limit their access, ensuring sensitive information is stripped from datasets, and continuously monitoring for suspicious behavior. Together, these steps mitigate risks and strengthen the overall security posture against AI-related cyber threats.
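The principle of least privilege can be illustrated with a deny-by-default allowlist. The sketch below is a minimal example under stated assumptions: `AgentPolicy`, `is_allowed`, and the `invoice-summarizer` agent are hypothetical names invented for illustration, not part of any specific agent framework.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class AgentPolicy:
    """Hypothetical per-agent allowlist: anything not listed is denied."""
    agent_id: str
    allowed_actions: frozenset = field(default_factory=frozenset)


def is_allowed(policy: AgentPolicy, resource: str, operation: str) -> bool:
    """Deny by default; permit only exact (resource, operation) pairs."""
    return (resource, operation) in policy.allowed_actions


# Illustrative policy (an assumption): this agent may read invoices only.
invoice_agent = AgentPolicy(
    agent_id="invoice-summarizer",
    allowed_actions=frozenset({("invoices", "read")}),
)

if __name__ == "__main__":
    print(is_allowed(invoice_agent, "invoices", "read"))    # permitted pair
    print(is_allowed(invoice_agent, "payroll", "read"))     # not on the allowlist
```

Checking every requested action against an explicit allowlist means that a newly added resource is inaccessible to the agent until someone deliberately grants it.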

What cybersecurity measures should be considered when deploying Agentic AI applications?

When deploying Agentic AI applications, organizations should prioritize cybersecurity measures such as ensuring full visibility of the AI’s interactions, restricting access through the principle of least privilege, removing unnecessary sensitive data, and conducting real-time monitoring for any anomalies. These measures are essential to protect against the heightened cyber threats AI poses.
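As a rough illustration of removing sensitive data before an agent sees it, the sketch below masks email addresses and US-style Social Security numbers with regular expressions. The patterns and the `redact` helper are simplifying assumptions; a production system would likely need broader coverage (names, account numbers, free-text identifiers) than two regexes can provide.

```python
import re

# Simplified patterns (assumptions for illustration, not exhaustive detectors).
EMAIL_RE = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
SSN_RE = re.compile(r"\b\d{3}-\d{2}-\d{4}\b")


def redact(text: str) -> str:
    """Replace matched emails and SSN-like strings with placeholder tokens."""
    text = EMAIL_RE.sub("[EMAIL]", text)
    return SSN_RE.sub("[SSN]", text)


if __name__ == "__main__":
    print(redact("Contact jane.doe@example.com, SSN 123-45-6789."))
```

Redacting at ingestion time, before data reaches the agent or its training set, limits what can leak even if the agent itself is later compromised.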

What are the implications of AI threats like data poisoning on Agentic AI security?

AI threats like data poisoning pose significant implications for Agentic AI security. Malicious actors can corrupt an AI model by modifying a small fraction of its training data, leading to misleading outcomes and potential privacy violations. Given the autonomous nature of Agentic AI, such attacks can occur unnoticed, emphasizing the need for stringent AI security measures to safeguard against these vulnerabilities.
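One partial safeguard is to record a cryptographic fingerprint of each trusted training record and re-check it before training. The sketch below uses SHA-256 from Python's standard `hashlib`; the `fingerprint` and `find_tampered` helpers are hypothetical. It only detects records modified after the manifest was created, and it cannot identify data that was already poisoned at the source.

```python
import hashlib


def fingerprint(records: list[str]) -> list[str]:
    """Return a SHA-256 hex digest for each record, in order."""
    return [hashlib.sha256(r.encode("utf-8")).hexdigest() for r in records]


def find_tampered(records: list[str], manifest: list[str]) -> list[int]:
    """Return indices of records whose digest no longer matches the manifest."""
    if len(records) != len(manifest):
        raise ValueError("record count differs from manifest")
    current = fingerprint(records)
    return [i for i, (now, then) in enumerate(zip(current, manifest)) if now != then]


if __name__ == "__main__":
    trusted = ["label=spam: win a prize", "label=ham: meeting at 3pm"]
    manifest = fingerprint(trusted)           # stored when the data was vetted
    altered = ["label=ham: win a prize", "label=ham: meeting at 3pm"]
    print(find_tampered(altered, manifest))   # indices that changed
```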

Why is monitoring for suspicious behavior crucial in the context of Agentic AI?

Monitoring for suspicious behavior is crucial in the context of Agentic AI because the autonomous operations of these AI agents can lead to undetected errors or security breaches. Early detection of unusual behavior allows organizations to respond swiftly, preventing potential data breaches, mitigating the risks of AI-related cyber threats, and ultimately protecting sensitive information.
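A very simple form of behavioral monitoring is a sliding-window rate check that flags an agent when it performs more actions than expected in a short period. The `RateMonitor` class and its thresholds below are assumptions for illustration; real monitoring would typically combine several signals, not action rate alone.

```python
from collections import deque


class RateMonitor:
    """Flag an agent that exceeds max_actions within window_seconds."""

    def __init__(self, max_actions: int, window_seconds: float):
        self.max_actions = max_actions
        self.window_seconds = window_seconds
        self._timestamps = deque()

    def record(self, timestamp: float) -> bool:
        """Record one action; return True if the recent rate looks anomalous."""
        self._timestamps.append(timestamp)
        # Drop actions that have fallen out of the sliding window.
        while timestamp - self._timestamps[0] > self.window_seconds:
            self._timestamps.popleft()
        return len(self._timestamps) > self.max_actions


if __name__ == "__main__":
    monitor = RateMonitor(max_actions=3, window_seconds=10.0)
    for t in [0.0, 1.0, 2.0, 3.0, 20.0]:
        print(t, monitor.record(t))
```

A flagged result would normally trigger an alert or pause the agent for human review, so that autonomous activity does not continue unchecked.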

| Key Point | Description |
|---|---|
| Transformation of Business | AI is changing business operations, leading to increased efficiency but also introducing cybersecurity risks. |
| Agentic AI Definition | Agentic AI refers to autonomous AI models that can perform complex tasks without human intervention. |
| Cybersecurity Risks | Agentic AI requires access to large datasets, making it a target for cybercriminals and increasing the risk of privacy breaches. |
| Principle of Least Privilege | Organizations should limit the access of AI agents to only the necessary data and applications. |
| Monitoring and Response | Businesses must actively monitor AI behavior to detect anomalies and respond to potential threats. |

Summary

Agentic AI cybersecurity is crucial as businesses increasingly adopt autonomous AI systems. The rise of agentic AI makes it necessary to address the vulnerabilities that come with broad data access and the potential for data manipulation. Robust security measures are essential for safeguarding organizations against cyber threats: maximizing visibility, applying the principle of least privilege, limiting sensitive information, and monitoring proactively. Prioritizing cybersecurity lets businesses realize the benefits of agentic AI without compromising data integrity and privacy.