Agentic AI is changing how organizations use artificial intelligence by letting autonomous AI agents perform tasks with minimal human intervention. The technology improves operational efficiency, but it also introduces cybersecurity risks that organizations must address. As businesses rely on these systems for functions such as data analysis and customer service, machine learning vulnerabilities and data privacy issues become more pronounced: an agent with broad access can expose sensitive information and contribute to significant breaches. Companies that want the benefits of autonomous AI agents need cybersecurity strategies built for them.

Agentic AI, also described as autonomous artificial intelligence, allows AI systems to carry out complex tasks without direct human oversight, which can increase productivity. It also raises challenges in data privacy and cybersecurity. Because these agents need access to large datasets, they become prime targets for attackers, and defending them requires strategies tailored to machine learning vulnerabilities. The sections below cover the main risks and the measures organizations can take before and after deployment.

Understanding Agentic AI and Its Impact on Cybersecurity

Agentic AI is revolutionizing industries by automating complex tasks that traditionally required human oversight. By leveraging advanced machine learning and natural language processing, these autonomous agents can perform multi-step tasks efficiently, from handling customer inquiries to conducting detailed data analyses. However, the autonomous nature of agentic AI introduces a new set of cybersecurity challenges that organizations must navigate carefully. As AI technologies become increasingly integrated into business operations, understanding the implications of agentic AI on cybersecurity is crucial for mitigating risks.

Adoption of agentic AI is predicted to grow significantly in the coming years, with Gartner estimating that a substantial portion of generative AI interactions will involve these agents. This growth comes with heightened cybersecurity concerns. Agents and the models behind them depend on large datasets, which makes them attractive targets for cybercriminals who can exploit vulnerabilities to reach sensitive information. As agentic AI continues to evolve, organizations need to address these risks proactively and develop robust strategies to protect their data.

Cybersecurity Risks Associated with Agentic AI

The integration of agentic AI into business processes raises several cybersecurity risks, primarily because these systems act autonomously. Traditional AI applications require human intervention before anything happens, whereas an agentic system uses its own access to data and applications to act directly. If an AI agent is compromised, attackers can exploit that access to reach databases, potentially causing data breaches that cost organizations millions. This is why organizations need to understand AI cybersecurity risks and implement comprehensive security measures to safeguard against them.

Another critical risk associated with agentic AI is the potential for machine learning vulnerabilities. These vulnerabilities can arise from issues such as data poisoning, where malicious entities manipulate the training data to influence the AI’s decision-making process. The consequences of such attacks can be devastating, as flawed AI outputs can lead to erroneous conclusions and actions that could have severe ramifications for businesses. Therefore, it is crucial for organizations to develop robust cybersecurity strategies for AI, focusing on securing data integrity and ensuring that agentic AI operates within defined parameters.
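One way to protect data integrity against tampering is to fingerprint an approved dataset and re-check it before each training run. The sketch below is a minimal illustration, not a complete defense: the records, the `fingerprint` and `find_tampered` helpers, and the manifest format are all hypothetical, and the check only catches changes made after approval, not data that was already poisoned when it was collected.

```python
import hashlib


def fingerprint(records):
    """Return one SHA-256 hex digest per record (records are strings)."""
    return [hashlib.sha256(r.encode("utf-8")).hexdigest() for r in records]


def find_tampered(records, trusted_digests):
    """Return the indices of records whose digest differs from the manifest."""
    current = fingerprint(records)
    if len(current) != len(trusted_digests):
        raise ValueError("record count differs from the trusted manifest")
    return [i for i, (a, b) in enumerate(zip(current, trusted_digests)) if a != b]


# Hypothetical training rows, fingerprinted when the dataset was approved.
approved = [
    "refund request -> route to billing",
    "password reset -> route to support",
]
manifest = fingerprint(approved)

# Later, before retraining: the second row has been altered.
candidate = [
    "refund request -> route to billing",
    "password reset -> route to attacker",
]
tampered = find_tampered(candidate, manifest)
print(tampered)
```

In practice the manifest would need to be stored somewhere the training pipeline cannot write to; otherwise an attacker who can change the data can also change the digests.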

Implementing Effective Cybersecurity Strategies for Agentic AI

To effectively combat the cybersecurity risks associated with agentic AI, organizations must adopt a comprehensive approach to their cybersecurity strategies. One of the foundational elements of this approach is maximizing visibility into AI workflows. This entails ensuring that security teams have full transparency regarding an AI agent’s operations, including the data it can access and the applications it interacts with. By utilizing advanced network mapping tools and maintaining clear records of AI interactions, businesses can better identify potential vulnerabilities and take proactive measures to mitigate them.
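Keeping clear records of AI interactions can start with something as simple as a structured audit log. The following sketch assumes a hypothetical `AgentAuditLog` class and made-up agent and resource names; a real deployment would more likely forward these entries to an existing logging or SIEM pipeline.

```python
import json
from datetime import datetime, timezone


class AgentAuditLog:
    """In-memory record of which agent did what to which resource."""

    def __init__(self):
        self.entries = []

    def record(self, agent_id, action, resource):
        entry = {
            "time": datetime.now(timezone.utc).isoformat(),
            "agent": agent_id,
            "action": action,
            "resource": resource,
        }
        self.entries.append(entry)
        return entry

    def resources_touched(self, agent_id):
        """Sorted, de-duplicated list of resources an agent has accessed."""
        return sorted({e["resource"] for e in self.entries if e["agent"] == agent_id})

    def to_jsonl(self):
        """Serialize the log as one JSON object per line."""
        return "\n".join(json.dumps(e) for e in self.entries)


# Hypothetical agents and resources, for illustration only.
log = AgentAuditLog()
log.record("support-agent", "read", "crm.tickets")
log.record("support-agent", "write", "crm.tickets")
log.record("support-agent", "read", "kb.articles")
log.record("report-agent", "read", "warehouse.sales")

print(log.resources_touched("support-agent"))
```

A per-agent list of touched resources gives security teams a concrete starting point for asking whether each access was actually needed.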

In addition to enhancing visibility, employing the principle of least privilege is essential for minimizing cybersecurity risks. This principle mandates that AI agents should only have access to the resources that are necessary for their specific tasks. By limiting permissions and restricting access to sensitive databases or applications, organizations can significantly reduce their attack surface. This strategic approach not only protects valuable information but also prevents lateral movement by cybercriminals, thereby fortifying the overall security posture of the organization.
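Least privilege for an agent can be expressed as a deny-by-default allowlist that is checked before every action. The sketch below is illustrative only: the `POLICY` table, the agent names, and the `authorize` function are assumptions, not part of any particular agent framework.

```python
class PermissionDenied(Exception):
    """Raised when an agent attempts an action it was not granted."""


# Hypothetical policy: each agent gets only the (action, resource)
# pairs that its specific task requires.
POLICY = {
    "support-agent": {("read", "crm.tickets"), ("write", "crm.tickets")},
    "report-agent": {("read", "warehouse.sales")},
}


def authorize(agent_id, action, resource):
    """Deny by default; allow only pairs explicitly granted in POLICY."""
    allowed = POLICY.get(agent_id, set())
    if (action, resource) not in allowed:
        raise PermissionDenied(f"{agent_id} may not {action} {resource}")
    return True


authorize("support-agent", "read", "crm.tickets")  # allowed

try:
    authorize("support-agent", "read", "warehouse.sales")
except PermissionDenied as err:
    print(err)
```

Because unknown agents and unlisted resources are rejected automatically, a compromised support agent in this sketch could not be used to reach the sales warehouse, which is the lateral movement the principle is meant to prevent.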

Data Privacy Concerns with AI Agents

Data privacy is a paramount concern when deploying agentic AI, particularly in scenarios where sensitive information is involved. AI agents often handle large volumes of data, including personally identifiable information (PII), which makes them attractive targets for cyberattacks. To mitigate the risk of privacy violations, organizations must implement strict data governance policies that dictate what information AI agents can access. Stripping unnecessary sensitive data from training datasets helps businesses protect customer privacy and supports compliance with regulations such as GDPR.

Furthermore, organizations should adopt data anonymization techniques so that, if a breach occurs, the exposed information is far less likely to compromise individual privacy. This proactive approach not only safeguards customer data but also builds trust with clients and stakeholders. By prioritizing data privacy in their AI strategies, businesses can foster a secure environment that encourages the responsible use of agentic AI, maximizing its benefits while minimizing potential risks.
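Stripping sensitive data from a dataset can be sketched as two steps: redacting PII from free text and replacing direct identifiers with keyed pseudonyms. The example below is a rough illustration under several assumptions. The regular expressions are deliberately simple and will miss many real-world formats, the field names and key are hypothetical, and keyed hashing is pseudonymization rather than full anonymization, so the output may still count as personal data under GDPR.

```python
import hashlib
import hmac
import re

# Simplified patterns for illustration; real PII detection needs far more coverage.
EMAIL = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE = re.compile(r"\+?\d[\d\s().-]{7,}\d")


def redact(text):
    """Replace email addresses and phone-like numbers with placeholders."""
    text = EMAIL.sub("[EMAIL]", text)
    return PHONE.sub("[PHONE]", text)


def pseudonymize(value, secret_key):
    """Map an identifier to a stable token using HMAC-SHA256."""
    digest = hmac.new(secret_key, value.encode("utf-8"), hashlib.sha256)
    return digest.hexdigest()[:16]


# Hypothetical record and key; a real key would come from a secrets manager.
KEY = b"demo-key-not-for-production"
row = {
    "customer_id": "C-1001",
    "note": "Call jane.doe@example.com or +1 (555) 010-9999 about the refund.",
}
clean = {
    "customer_id": pseudonymize(row["customer_id"], KEY),
    "note": redact(row["note"]),
}
print(clean["note"])
```

Using a keyed hash instead of a plain hash means someone who obtains the dataset cannot rebuild the mapping by hashing guessed identifiers unless they also have the key.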

Monitoring and Oversight of AI Agents

Monitoring agentic AI should be a continuous process that involves real-time oversight of its operations. Given the autonomous nature of these systems, it is crucial for organizations to implement monitoring tools that can detect unusual behavior and flag potential security threats. By rolling out AI agents in small, controlled environments, businesses can closely observe their performance and identify any biases or anomalies before wider deployment. This measured approach allows for the timely correction of issues and helps maintain the integrity of AI systems.
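Detecting unusual behavior can begin with a simple statistical baseline collected during the controlled rollout. The sketch below flags an agent whose activity in a time window deviates sharply from that baseline. The `is_anomalous` helper, the threshold of three standard deviations, and the sample counts are illustrative assumptions; production monitoring would typically track many signals, not just call volume.

```python
from statistics import mean, stdev


def is_anomalous(baseline, observed, threshold=3.0):
    """Flag a count that lies more than `threshold` standard deviations
    from the mean of the baseline observations."""
    mu = mean(baseline)
    sigma = stdev(baseline)
    if sigma == 0:
        # A perfectly flat baseline: any change at all is unusual.
        return observed != mu
    return abs(observed - mu) / sigma > threshold


# Hypothetical database calls per minute observed during a pilot deployment.
baseline_calls = [12, 15, 11, 14, 13, 12, 16, 14]

print(is_anomalous(baseline_calls, 14))  # typical volume
print(is_anomalous(baseline_calls, 90))  # sudden spike worth investigating
```

A spike like the second case does not prove compromise, but it is the kind of signal that should trigger a closer look at what the agent was doing.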

In addition to initial monitoring, organizations must establish a framework for ongoing oversight of AI agents. Regular audits and assessments will ensure that these systems operate within predetermined safety parameters and adhere to established compliance standards. The use of automated detection and response solutions can significantly enhance an organization’s ability to respond to security incidents quickly, thus minimizing potential damages. By fostering a culture of vigilance and continuous improvement, businesses can better protect their AI investments and ensure that agentic AI contributes positively to their operations.
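An automated response can be as simple as suspending an agent once it has repeatedly stepped outside its safety parameters. The sketch below shows one possible shape for such a control; the `AgentCircuitBreaker` class, the limit of three violations, and the agent name are hypothetical, and a real system would also alert a human and record why the suspension happened.

```python
class AgentCircuitBreaker:
    """Suspend an agent after a set number of reported violations."""

    def __init__(self, max_violations=3):
        self.max_violations = max_violations
        self.violations = {}
        self.suspended = set()

    def report_violation(self, agent_id):
        """Count a violation; return True if the agent is now suspended."""
        count = self.violations.get(agent_id, 0) + 1
        self.violations[agent_id] = count
        if count >= self.max_violations:
            self.suspended.add(agent_id)
        return agent_id in self.suspended

    def may_run(self, agent_id):
        """Agents run unless they have been suspended."""
        return agent_id not in self.suspended


breaker = AgentCircuitBreaker(max_violations=3)
for _ in range(3):
    breaker.report_violation("report-agent")

print(breaker.may_run("report-agent"))
print(breaker.may_run("support-agent"))
```

Reinstating a suspended agent is deliberately left out of the sketch, on the assumption that this step should require a human decision after review.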

The Future of AI and Cybersecurity

The future of AI is promising, but it also presents significant cybersecurity challenges that organizations must address. As agentic AI continues to evolve, so too must the strategies employed to protect it. The rise of autonomous AI agents necessitates a shift in the approach to cybersecurity, with a focus on proactive measures that anticipate potential threats. Failure to keep pace with these changes could result in severe consequences, including financial losses and reputational damage.

Moreover, as AI technologies become more prevalent in various sectors, the landscape of cybersecurity will be fundamentally transformed. Organizations will need to invest in advanced security solutions that leverage machine learning and artificial intelligence to predict and mitigate risks more effectively. By embracing a forward-thinking approach to cybersecurity, businesses can harness the full potential of agentic AI while safeguarding against the vulnerabilities that accompany its deployment.

Challenges in Securing AI Systems

Securing AI systems, particularly agentic AI, presents unique challenges that organizations must navigate. One significant challenge is the fast-paced nature of AI technology, which often outstrips existing cybersecurity measures. As AI models become more sophisticated, attackers are also becoming increasingly adept at exploiting their vulnerabilities. This ongoing arms race means that organizations must remain vigilant and continuously adapt their security strategies to counter new threats effectively.

Additionally, the complexity of AI systems can make it difficult for organizations to identify and address vulnerabilities. Many AI models operate as black boxes, making it challenging to understand how they make decisions or identify potential weaknesses. This lack of transparency can hinder efforts to implement effective cybersecurity measures. To overcome this challenge, organizations should prioritize explainable AI initiatives that provide insight into AI decision-making processes, thus enabling more effective risk management.

The Role of Human Oversight in AI Security

Despite the autonomous nature of agentic AI, human oversight remains a critical component of AI security. Organizations must ensure that skilled professionals are involved in the development, deployment, and monitoring of AI systems. Human experts can provide valuable insights into potential vulnerabilities and help establish security protocols that align with best practices. By fostering collaboration between AI technology and human expertise, organizations can create a robust security framework that minimizes risks.

Moreover, ongoing training and education for employees involved in AI operations are essential for maintaining security. As AI technologies evolve, so too must the knowledge base of the workforce. Organizations should invest in training programs that equip employees with the skills needed to identify and respond to potential security threats. This proactive approach not only enhances the security posture of the organization but also empowers employees to take ownership of their role in safeguarding AI systems.

Navigating Regulatory Compliance in AI Cybersecurity

As AI technologies proliferate, so do the regulations governing their use, particularly concerning cybersecurity and data privacy. Organizations deploying agentic AI must navigate a complex landscape of regulations to ensure compliance and protect sensitive information. Understanding the legal implications of AI deployment is crucial for mitigating risks and avoiding potential penalties. This includes staying informed about evolving regulations such as GDPR, CCPA, and industry-specific standards.

To effectively navigate regulatory compliance, organizations should establish a comprehensive compliance framework that outlines their obligations regarding AI data privacy and security. This framework should include regular audits, risk assessments, and documentation of compliance efforts. By prioritizing regulatory compliance in their AI strategies, businesses can not only protect themselves from legal repercussions but also build trust with customers and stakeholders by demonstrating a commitment to ethical AI practices.

Frequently Asked Questions

What are the cybersecurity risks associated with agentic AI?

Agentic AI poses unique cybersecurity risks due to its autonomous nature and the vast amounts of data it accesses. Cybercriminals target these AI agents to exploit vulnerabilities, potentially leading to significant data breaches. Additionally, the lack of human oversight in agentic AI operations increases the risk of privacy violations and of errors going unnoticed, including AI hallucinations and incorrect conclusions drawn from corrupted datasets.

How can organizations enhance cybersecurity for autonomous AI agents?

Organizations can enhance cybersecurity for autonomous AI agents by maximizing visibility into their workflows, employing the principle of least privilege to limit access, stripping sensitive information from datasets, and monitoring for suspicious behavior. These strategies help mitigate risks and protect AI systems from potential cyber threats.

What is the principle of least privilege in the context of agentic AI?

The principle of least privilege states that any AI agent should only have access to the resources necessary to perform its functions. By minimizing permissions, organizations can reduce the attack surface and prevent unauthorized access to sensitive information, thereby enhancing the cybersecurity posture of their autonomous AI agents.

What are some common machine learning vulnerabilities in agentic AI?

Common machine learning vulnerabilities in agentic AI include data poisoning, where attackers modify training datasets to corrupt model outputs, and privacy violations due to the mishandling of sensitive data. These vulnerabilities underscore the need for robust cybersecurity measures when deploying agentic AI applications.

How does AI data privacy relate to agentic AI?

AI data privacy is critical for agentic AI as these systems often handle large amounts of sensitive information. Ensuring that agentic AI does not retain unnecessary personally identifiable information and implementing strict data access controls can help mitigate privacy risks and protect user data from breaches.

What strategies can businesses implement to monitor agentic AI for suspicious behavior?

Businesses can implement several strategies to monitor agentic AI for suspicious behavior, including gradual deployment of AI agents, real-time monitoring for anomalies, and utilizing automated detection and response solutions. These measures allow organizations to swiftly identify and address potential cybersecurity threats.

Why is it important to limit sensitive information in datasets used by agentic AI?

Limiting sensitive information in datasets used by agentic AI is important to prevent privacy violations and minimize the impact of data breaches. By removing unnecessary personally identifiable information, organizations can reduce risks associated with unauthorized access and ensure compliance with data protection regulations.

What role does visibility play in securing autonomous AI agents?

Visibility plays a crucial role in securing autonomous AI agents as it allows security and operations teams to understand the AI’s workflows, data access points, and interactions with other systems. This knowledge is essential for identifying vulnerabilities and ensuring that appropriate security measures are in place.

What actions should organizations take before deploying agentic AI applications?

Before deploying agentic AI applications, organizations should assess their cybersecurity strategies, maximize visibility into AI workflows, limit access through the principle of least privilege, strip sensitive information from datasets, and establish monitoring protocols for suspicious behavior. These actions help ensure a secure deployment of AI technology.

How can businesses prepare for the evolving cybersecurity landscape of agentic AI?

Businesses can prepare for the evolving cybersecurity landscape of agentic AI by staying informed about emerging threats, continuously updating their cybersecurity strategies, investing in advanced detection tools, and fostering a culture of security awareness among employees. Proactive measures will help mitigate risks associated with the adoption of agentic AI.

| Key Point | Explanation |

|---|---|

| Transformation of Businesses | AI is changing business operations, promising efficiency and innovation. |

| Agentic AI Definition | Agentic AI operates autonomously, automating tasks with minimal human involvement. |

| Cybersecurity Risks | Agentic AI presents unique cybersecurity challenges, becoming targets for cybercriminals. |

| Need for Enhanced Security | Businesses must evolve their cybersecurity strategies to protect against AI-related vulnerabilities. |

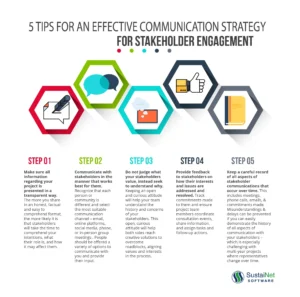

| Four Key Security Steps | Visibility, least privilege, limiting sensitive information, and monitoring behavior are crucial. |

Summary

Agentic AI is changing the business landscape, but it also brings significant cybersecurity challenges that organizations must address. As businesses increasingly rely on autonomous AI systems, robust security measures become essential. By maximizing visibility, applying the principle of least privilege, limiting the sensitive information in datasets, and proactively monitoring for suspicious behavior, companies can safeguard their operations against potential threats. The balance between leveraging the advantages of agentic AI and mitigating its risks will define the future of secure AI deployment.